v1.3.6

Release date: 2018-10-06 00:48:23

Latest facebook/zstd release: v1.5.6 (2024-03-31 02:57:28)

Zstandard v1.3.6 release is focused on intensive dictionary compression for database scenarios.

This is a new environment we are experimenting with. The success of dictionary compression on small data, which databases tend to store in abundance, has led to increased adoption, and we now see scenarios where literally thousands of dictionaries are used simultaneously, with new dictionaries constantly being generated or updated.

To face these new conditions, v1.3.6 brings a few improvements to the table :

- A brand new, faster dictionary builder, by @jenniferliu, under guidance from @terrelln. The new builder, named fastcover, is about 10x faster than our previous default generator, cover, while suffering only negligible accuracy losses (<1%). It is effectively an approximate version of cover, which trades a little accuracy for speed and lower memory usage. The new dictionary builder is so effective that it has become our new default dictionary builder (`--train`). The slower but higher-quality generator remains accessible using the `--train-cover` command.

Here is an example, using the "github user records" public dataset (about 10K records of about 1K each) :

| builder algorithm | generation time | compression ratio |
|---|---|---|
| fast cover (v1.3.6 `--train`) | 0.9 s | x10.29 |
| cover (v1.3.5 `--train`) | 10.1 s | x10.31 |
| High accuracy fast cover (`--train-fastcover`) | 6.6 s | x10.65 |
| High accuracy cover (`--train-cover`) | 50.5 s | x10.66 |

- Faster dictionary decompression under memory pressure, when using thousands of dictionaries simultaneously. The new decoder is able to detect cold vs hot dictionary scenarios, and adds clever prefetching decisions to minimize memory latency. It typically improves decoding speed by ~+30% (vs v1.3.5).

-

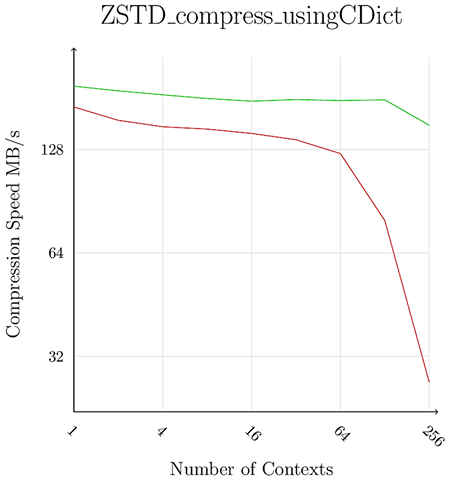

Faster dictionary compression under memory pressure, when using a lot of contexts simultaneously. The new design, by @felixhandte, reduces considerably memory usage when compressing small data with dictionaries, which is the main scenario found in databases. The sharp memory usage reduction makes it easier for CPU caches to manages multiple contexts in parallel. Speed gains scale with number of active contexts, as shown in the graph below :

Note that in real-life environments, the benefits tend to appear even earlier (at fewer active contexts), since CPU caches are usually shared with multiple other processes/threads at the same time, instead of being monopolized by a single synthetic benchmark.
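The fastcover idea can be illustrated with a simplified sketch. It assumes the commonly described structure of cover-style builders: count d-mer (d-byte substring) frequencies across the samples, split the input into epochs, keep the highest-scoring k-byte segment of each epoch (score = sum of frequencies of the distinct d-mers it contains), then zero the counts of the chosen d-mers so later segments favor new content. The approximation that makes fastcover cheap is modeled here by counting d-mers in a fixed table of 2^f hashed counters rather than exactly, so hash collisions blur the frequencies. The function name `fastcover_sketch`, the hash constant, and the values of `F_BITS` and `D_LEN` are hypothetical; this is not zstd's implementation, and it omits sample splitting, acceleration, and the dictionary header (entropy tables) that the real builder produces.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical sketch parameters, chosen for illustration only. */
#define F_BITS 12u   /* frequency table holds 2^F_BITS hashed counters */
#define D_LEN  8u    /* d-mer length in bytes */

/* Hash the D_LEN-byte d-mer at p into F_BITS bits (multiplicative hash). */
static uint32_t dmer_hash(const unsigned char *p)
{
    uint64_t v;
    memcpy(&v, p, sizeof v);  /* D_LEN == 8: one alignment-safe 64-bit load */
    return (uint32_t)((v * 0xCF1BBCDCB7A56463ULL) >> (64u - F_BITS));
}

/* Fill `dict` (capacity `cap`) with k-byte segments picked from `data`.
 * Returns the number of content bytes written at the start of `dict`. */
size_t fastcover_sketch(const unsigned char *data, size_t n, size_t k,
                        unsigned char *dict, size_t cap)
{
    assert(k >= D_LEN && cap >= k);
    if (n < k) return 0;

    const size_t table = (size_t)1 << F_BITS;
    uint32_t *freq = calloc(table, sizeof *freq); /* approximate d-mer counts */
    uint32_t *seg = calloc(table, sizeof *seg);   /* hash occurrences in window */
    if (!freq || !seg) { free(freq); free(seg); return 0; }

    /* 1. Count every d-mer in the hashed table; collisions make counts approximate. */
    for (size_t i = 0; i + D_LEN <= n; i++) freq[dmer_hash(data + i)]++;

    /* 2. Split the input into epochs and pick one segment per epoch. */
    size_t epochs = cap / k;
    size_t epoch_size = n / epochs;
    if (epoch_size < k) { epochs = n / k; epoch_size = k; }

    size_t tail = cap;                       /* segments are packed from the end */
    const size_t dmers_per_seg = k - D_LEN + 1;
    for (size_t e = 0; e < epochs && tail >= k; e++) {
        const size_t begin = e * epoch_size;
        const size_t end = begin + epoch_size;
        uint64_t score = 0, best_score = 0;
        size_t best = begin, win = begin;
        memset(seg, 0, table * sizeof *seg);

        /* Slide a k-byte window; score sums freq over the distinct hashes in it. */
        for (size_t pos = begin; pos + D_LEN <= end; pos++) {
            uint32_t h = dmer_hash(data + pos);
            if (seg[h]++ == 0) score += freq[h];
            if (pos - win + 1 > dmers_per_seg) {       /* drop the oldest d-mer */
                uint32_t old = dmer_hash(data + win++);
                if (--seg[old] == 0) score -= freq[old];
            }
            if (pos - win + 1 == dmers_per_seg && score > best_score) {
                best_score = score;
                best = win;
            }
        }
        if (best_score == 0) continue;      /* nothing useful left in this epoch */

        /* 3. Zero the chosen d-mers so later epochs prefer new content. */
        for (size_t j = best; j + D_LEN <= best + k; j++) freq[dmer_hash(data + j)] = 0;
        tail -= k;
        memcpy(dict + tail, data + best, k);
    }

    const size_t used = cap - tail;
    memmove(dict, dict + tail, used);        /* move content to the buffer start */
    free(freq);
    free(seg);
    return used;
}
```

Packing segments from the end of the buffer places the first selected segments closest to the data being compressed, where they can be referenced with the smallest offsets. Zeroing the frequencies of already-selected d-mers is what stops the builder from filling the dictionary with near-duplicate segments.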

Other noticeable improvements

A new command, `--adapt`, makes it possible to pipe gigantic amounts of data between servers (typically for backup scenarios) and lets the compressor automatically adjust its compression level based on perceived network conditions. When the network becomes slower, zstd uses the available time to compress more, and accelerates again when bandwidth permits. This reduces the need to "pre-calibrate" speed and compression level, and is a good simplification for system administrators. It also results in gains in both dimensions (better compression ratio and better speed) compared to the more traditional "fixed" compression level strategy.

This is still early days for this feature, and we are eager to get feedback on its usage. We know, for example, that it works better in fast-bandwidth environments, as adaptation itself becomes slow when bandwidth is slow. This is something that will need to be improved. Nonetheless, in its current incarnation, `--adapt` already proves useful for several datacenter scenarios, which is why we are releasing it.
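A minimal sketch of the kind of feedback loop `--adapt` describes, assuming the compressor can measure, per interval, the fraction of time it spent blocked writing output (network slower than compression) and blocked reading input (source slower than compression). The `io_stats` type, the `next_level` function, and both thresholds are hypothetical and are not zstd's actual adaptation logic.

```c
#include <assert.h>

/* Hypothetical per-interval measurements, as fractions of wall time (0..1). */
typedef struct {
    double wait_output;  /* blocked writing: the network is the bottleneck */
    double wait_input;   /* blocked reading: the data source is the bottleneck */
} io_stats;

/* Hypothetical thresholds forming a dead band between "idle" and "busy". */
#define IDLE_HIGH 0.10   /* waiting more than this: spare time, compress harder */
#define IDLE_LOW  0.02   /* waiting less than this: compressor is the bottleneck */

/* Return the compression level to use for the next interval. */
int next_level(int level, io_stats s, int min_level, int max_level)
{
    assert(min_level <= max_level);
    if (s.wait_output > IDLE_HIGH || s.wait_input > IDLE_HIGH)
        level++;             /* spare time available: spend it on a better ratio */
    else if (s.wait_output < IDLE_LOW && s.wait_input < IDLE_LOW)
        level--;             /* compressor never waits: speed up */
    /* otherwise inside the dead band: keep the current level */

    if (level < min_level) level = min_level;
    if (level > max_level) level = max_level;
    return level;
}
```

The gap between the two thresholds acts as a dead band, so the level holds steady instead of oscillating when the pipeline is roughly balanced. Because each decision needs a full measurement interval, a controller of this shape also reacts more slowly when throughput is low.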

Advanced users will be pleased by the expansion of an existing tool, tests/paramgrill, which has been refined by @georgelu. This tool explores the space of advanced compression parameters to find the best possible set of parameters for a given scenario. It takes as input a set of samples and a set of constraints, and works its way towards better and better compression parameters that respect the constraints.

Example :

```
./paramgrill --optimize=cSpeed=50M dirToSamples/*   # requires minimum compression speed of 50 MB/s
optimizing for dirToSamples/* - limit compression speed 50 MB/s

(...)

/* Level 5 */       { 20, 18, 18, 2, 5, 2, ZSTD_greedy, 0 },   /* R:3.147 at 75.7 MB/s - 567.5 MB/s */   # best level satisfying constraint
--zstd=windowLog=20,chainLog=18,hashLog=18,searchLog=2,searchLength=5,targetLength=2,strategy=3,forceAttachDict=0

(...)

/* Custom Level */  { 21, 16, 18, 2, 6, 0, ZSTD_lazy2, 0 },    /* R:3.240 at 53.1 MB/s - 661.1 MB/s */   # best custom parameters found
--zstd=windowLog=21,chainLog=16,hashLog=18,searchLog=2,searchLength=6,targetLength=0,strategy=5,forceAttachDict=0   # associated command arguments, can be copy/pasted for `zstd`
```
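The last step of that workflow, picking the highest-ratio parameter set that still meets the speed constraint and turning it into `--zstd=` arguments, can be sketched as follows. paramgrill itself generates and benchmarks the candidates; here the two rows are simply copied from the output above, and the `candidate` type and both functions are hypothetical. The numeric strategy values (3 for `ZSTD_greedy`, 5 for `ZSTD_lazy2`) and the field order are read off that same output.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* One measured parameter set; field names mirror the printed --zstd= keys. */
typedef struct {
    unsigned windowLog, chainLog, hashLog, searchLog, searchLength, targetLength;
    int strategy;          /* numeric id as printed: 3 = ZSTD_greedy, 5 = ZSTD_lazy2 */
    int forceAttachDict;
    double ratio;          /* measured compression ratio (the "R:" value) */
    double cSpeedMBps;     /* measured compression speed in MB/s */
} candidate;

/* Index of the highest-ratio candidate whose compression speed meets
 * min_cspeed, or -1 if no candidate satisfies the constraint. */
int best_under_speed(const candidate *c, size_t n, double min_cspeed)
{
    int best = -1;
    for (size_t i = 0; i < n; i++) {
        if (c[i].cSpeedMBps < min_cspeed) continue;   /* violates the constraint */
        if (best < 0 || c[i].ratio > c[best].ratio) best = (int)i;
    }
    return best;
}

/* Render a candidate as a --zstd= argument string; returns snprintf's result. */
int format_zstd_args(const candidate *c, char *buf, size_t len)
{
    assert(c != NULL && buf != NULL && len > 0);
    return snprintf(buf, len,
        "--zstd=windowLog=%u,chainLog=%u,hashLog=%u,searchLog=%u,"
        "searchLength=%u,targetLength=%u,strategy=%d,forceAttachDict=%d",
        c->windowLog, c->chainLog, c->hashLog, c->searchLog,
        c->searchLength, c->targetLength, c->strategy, c->forceAttachDict);
}
```

Called with a 50 MB/s floor on those two rows, `best_under_speed` selects the custom parameters (ratio 3.240 at 53.1 MB/s), matching the "best custom parameters found" line; raising the floor to 60 MB/s would leave only the level 5 row.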

Finally, the documentation has been updated to reflect the wording adopted by IETF RFC 8478 (Zstandard Compression and the application/zstd Media Type).

Detailed changes list

- perf: much faster dictionary builder, by @jenniferliu

- perf: faster dictionary compression on small data when using multiple contexts, by @felixhandte

- perf: faster dictionary decompression when using a very large number of dictionaries simultaneously

- cli : fix : no longer overwrites the destination when the source does not exist (#1082)

- cli : new command `--adapt`, for automatic compression level adaptation

- api : fix : block api can be streamed with > 4 GB, reported by @catid

- api : reduced `ZSTD_DDict` size by 2 KB

- api : minimum negative compression level is defined, and can be queried using `ZSTD_minCLevel()` (#1312).

- build: support Haiku target, by @korli

- build: Read Legacy support is now limited to v0.5+ by default. Can be changed at compile time with macro `ZSTD_LEGACY_SUPPORT`.

- doc : `zstd_compression_format.md` updated to match wording in IETF RFC 8478

- misc: tests/paramgrill, a parameter optimizer, by @GeorgeLu97

1. zstd-1.3.6.tar.gz (1.73 MB)

2. zstd-1.3.6.tar.gz.sha256 (84 B)

3. zstd-v1.3.6-win32.zip (1.17 MB)

4. zstd-v1.3.6-win64.zip (1.25 MB)